Author: ICCS

Maritime operations cover a wide range of activities, from shipping to search and rescue and maritime safety. In any type of maritime operation, timely information and surveillance are of the essence. In the ever-changing environment of the open sea, an object of interest (which could be anything from a vessel to an iceberg) will drift due to the wind and water currents and constantly change its position and even its shape. Once an event has been reported, the continued tracking of the object and the availability of constantly updated information on its position are of utmost importance.

Unmanned Aerial Vehicles (UAVs) can be used as a safe, easy-to-deploy and low-cost (compared to manned airborne assets) solution in surveillance. At the same time, computer vision and machine learning algorithms have been suggested as a means of automatically detecting and tracking objects with specific characteristics in videos or still images.

As part of the EFFECTOR project, we have developed an autonomous object detection and tracking module, carried on-board a UAV. The complete system is composed of a specially built octocopter UAV, the pilot’s station and the intelligence officer’s station. The UAV is equipped with a pair of daylight and thermal cameras providing a video stream for the UAV’s pilot, enabling Extended Visual Line-of-Sight (EVLOS) flight capability. To provide extended capabilities of object detection and tracking, a 3-axis stabilized gimbal equipped with powerful RGB and thermal cameras is fitted under the UAV and connected to the processing unit for onboard processing.

The main requirements identified for such a solution within the project were:

- Ability to detect objects;

- Ability to track the motion of detected objects;

- Timely information (live transmission of data);

- Fast deployment (minimal delays when an event has been reported and needs to be verified);

- Interoperability (compliance with existing communication protocols);

- Independent and self-sufficient operation;

- Cost effectiveness.

The on-board AI module is responsible for scanning the camera feed and detecting specific types of objects (such as vessels). Each detected object is assigned a unique key, and its movement is subsequently tracked.
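The idea of assigning a unique key to each detection and following it from frame to frame can be illustrated with a minimal sketch. This is not the EFFECTOR module's implementation: it assumes the detector outputs axis-aligned bounding boxes in pixel coordinates and uses a simple greedy intersection-over-union (IoU) match between consecutive frames. The names `IouTracker`, `iou` and the `threshold` value are hypothetical and chosen for illustration only.

```python
from dataclasses import dataclass, field
from itertools import count

# Assumed detector output: (x_min, y_min, x_max, y_max) in pixels.
Box = tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


@dataclass
class IouTracker:
    """Hypothetical tracker: keeps one box per unique key between frames."""
    threshold: float = 0.3  # illustrative value, not taken from the project
    tracks: dict[int, Box] = field(default_factory=dict)
    _ids: count = field(default_factory=lambda: count(1))

    def update(self, detections: list[Box]) -> dict[int, Box]:
        """Match this frame's detections to known tracks; return {key: box}."""
        unmatched = dict(self.tracks)
        current: dict[int, Box] = {}
        for det in detections:
            # Greedy choice: the remaining track that overlaps this box most.
            best = max(unmatched, key=lambda k: iou(unmatched[k], det), default=None)
            if best is not None and iou(unmatched[best], det) >= self.threshold:
                key = best
                del unmatched[best]      # each track is matched at most once
            else:
                key = next(self._ids)    # new object: issue a fresh unique key
            current[key] = det
        self.tracks = current            # tracks unseen in this frame are dropped
        return current


tracker = IouTracker()
frame1 = tracker.update([(10, 10, 50, 30)])
frame2 = tracker.update([(12, 11, 52, 31), (200, 200, 240, 220)])
```

In this sketch the slightly shifted box in the second frame keeps the key issued in the first frame, while the box that appears elsewhere receives a new one. A tracker meant for field use would typically also predict motion and tolerate missed detections over several frames, which this example deliberately omits.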

The machine learning architecture used in these procedures was trained on data from various sources. Dataset and model sizes were balanced to optimize results (the success rate of detection and tracking) without compromising the efficiency and speed of detection.

Once a vessel has been detected, the results of the onboard processing are overlaid on the Full HD video stream and transmitted to the Intelligence Officer’s workstation.

Figure: A detected vessel as it appears on the Intelligence Officer's station.

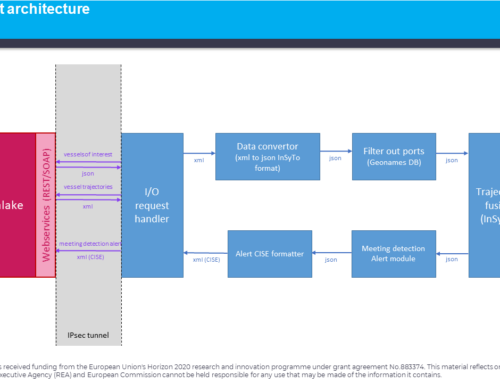

At the same time, the on-board adapters create a message (an eCISE VESSEL entity) compliant with the expanded version of the Common Information Sharing Environment (eCISE)[1] protocol, which can be forwarded to any eCISE-compatible system.

All communication between the UAV and the operational Command and Control center (C2) is compliant with the eCISE protocol. AIRCRAFT type entities are used to communicate the location and status of the UAV, and TASK type entities are used to receive missions, as well as to accept, reject or modify assigned missions based on the situation in the field.

Within the remit of the EFFECTOR project, the information produced by the UAV on detected and tracked vessels, the UAV’s status and location, and the communication on assigned missions will be stored in the Data Lakes holding all operational information, and will be available to users with access to the relevant layers (Local, Regional, National).

[1] https://ec.europa.eu/oceans-and-fisheries/ocean/blue-economy/other-sectors/common-information-sharing-environment-cise_en